NEW – Klaus Schwab’s World Economic Forum proposes to automate censorship of “hate speech” and “disinformation” with AI fed by “subject matter experts.”

Despite this article being labeled “an opinion” from a “wide array of voices”, it is more likely than not that the WEF favors controlling and monitoring our online speech through the use of enhanced artificial intelligence.

With 63% of the world’s population online, the internet is a mirror of society: it speaks all languages, contains every opinion and hosts a wide range of (sometimes unsavoury) individuals.

As the internet has evolved, so has the dark world of online harms. Trust and safety teams (the teams typically found within online platforms responsible for removing abusive content and enforcing platform policies) are challenged by an ever-growing list of abuses, such as child abuse, extremism, disinformation, hate speech and fraud; and increasingly advanced actors misusing platforms in unique ways.

The solution, however, is not as simple as hiring another roomful of content moderators or building yet another block list. Without a profound familiarity with different types of abuse, an understanding of hate group verbiage, fluency in terrorist languages and nuanced comprehension of disinformation campaigns, trust and safety teams can only scratch the surface.

A more sophisticated approach is required. By uniquely combining the power of innovative technology, off-platform intelligence collection and the prowess of subject-matter experts who understand how threat actors operate, scaled detection of online abuse can reach near-perfect precision.

It goes on…

…

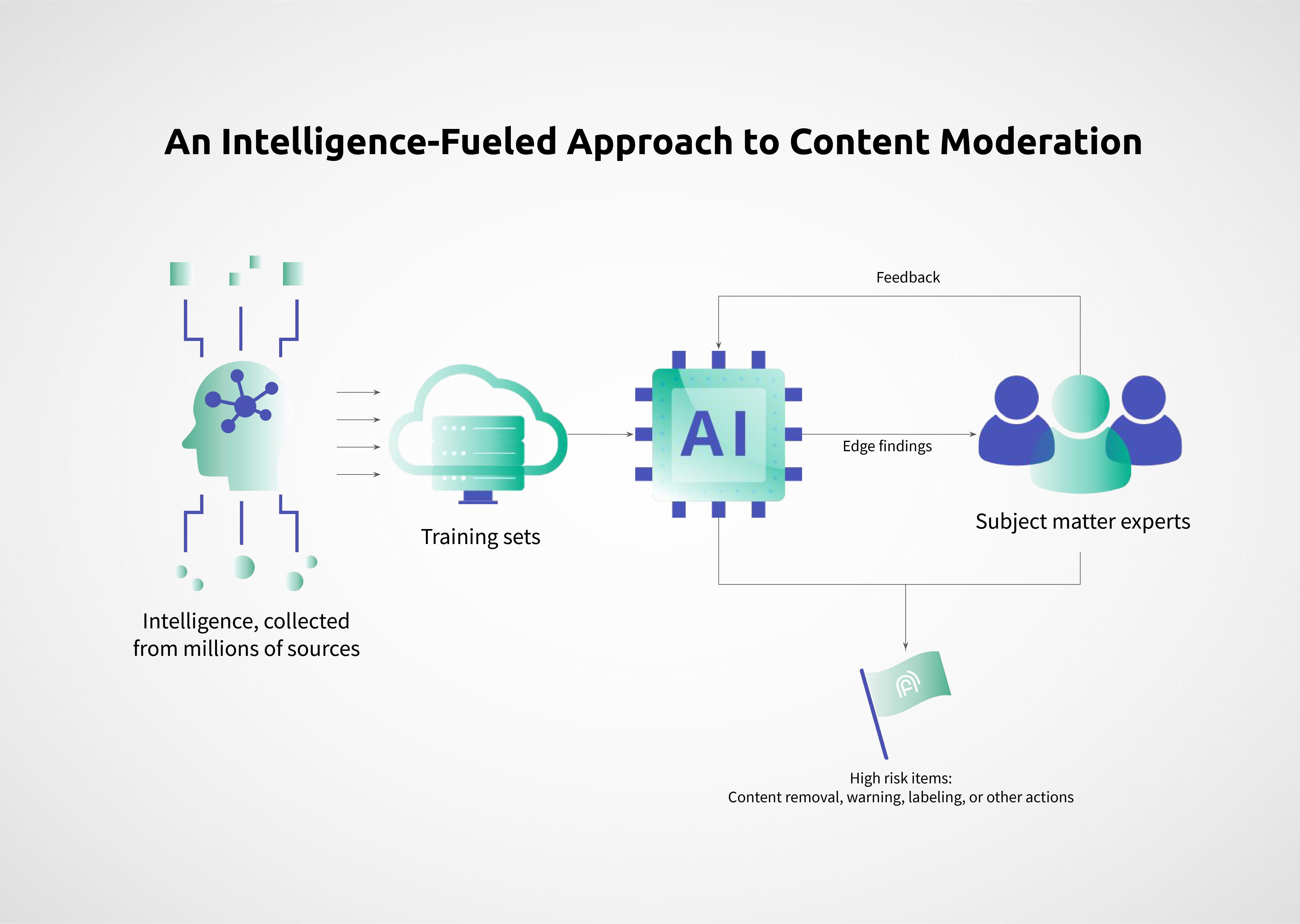

To overcome the barriers of traditional detection methodologies, we propose a new framework: rather than relying on AI to detect at scale and humans to review edge cases, an intelligence-based approach is crucial.

By bringing human-curated, multi-language, off-platform intelligence into learning sets, AI will then be able to detect nuanced, novel online abuses at scale, before they reach mainstream platforms. Supplementing this smarter automated detection with human expertise to review edge cases and identify false positives and negatives and then feeding those findings back into training sets will allow us to create AI with human intelligence baked in. This more intelligent AI gets more sophisticated with each moderation decision, eventually allowing near-perfect detection, at scale.

What could possibly go wrong?